TurtleBrains development began with OpenGL because it should help TurtleBrains remain fairly platform independent, at least across personal computers: Windows, Mac OS X and Linux, the first batch of target platforms. At the time I did not realize I was using deprecated functions, known as Legacy OpenGL. The fixed-function pipeline and several states are now obsolete, so with a lot of help from @neilogd, the legacy code was converted to OpenGL 3.2 Core, which should help reduce driver bugs and increase portability.

I decided to make this change while TurtleBrains only had rendering for Sprites and Text objects. Soon I hope to add shapes, a tile system, a particle system and possibly other graphical objects. It makes sense to do the conversion before adding those objects to reduce efforts, so this is the story of converting to OpenGL 3.2 Core.

First I started with a basic tutorial from www.opengl.org/wiki/Tutorials to get a grip on some shader basics. I’ve used them a few times but not enough to implement from memory, so the refresher helped get things going. With that working I made a define that toggles TurtleBrains between compiling in legacy mode and core mode, so I could easily compare the builds.

The first challenges

In Legacy mode the projection matrix is easily created by glOrtho(), but that function is gone in Core, so I looked up some documentation on how that matrix is built and got my own working, after some slight testing, as I needed to send the matrix down transposed. I tried separating the work into smaller steps so I could deal with any problems without blindly guessing. Very thankful I went with this approach.

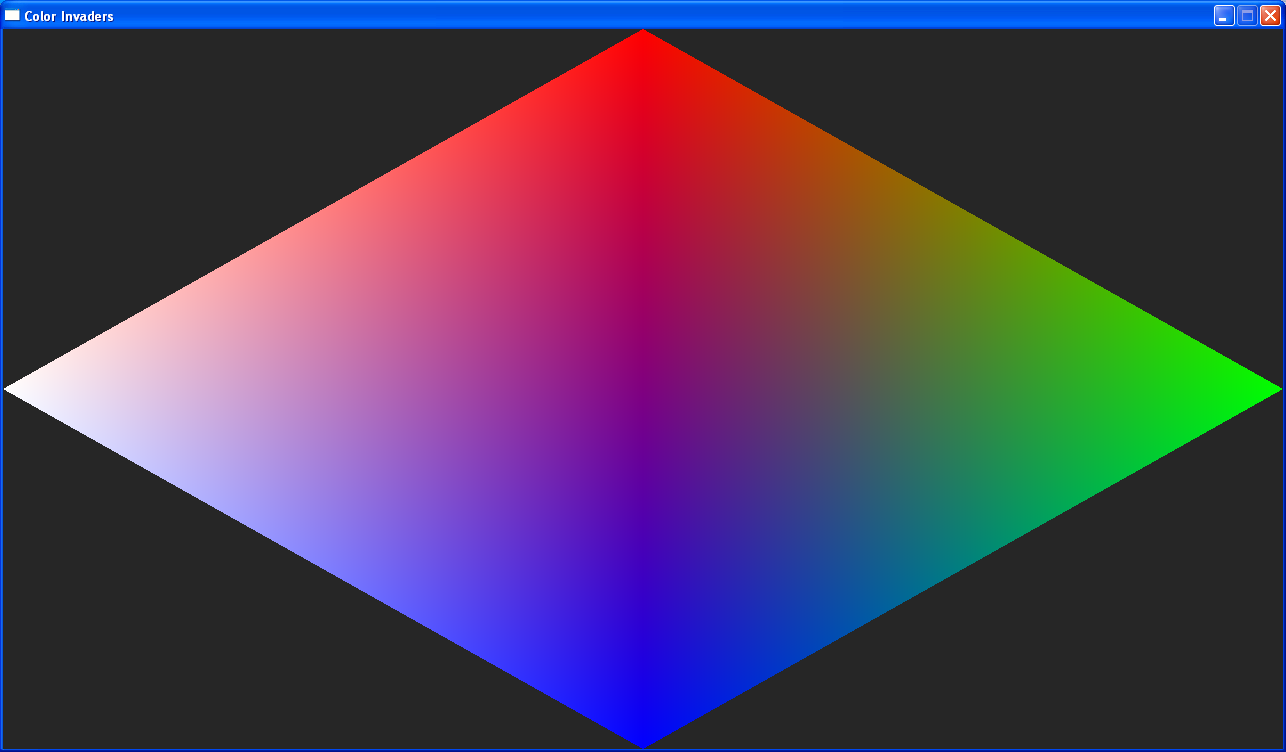

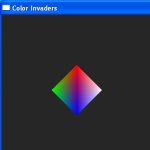

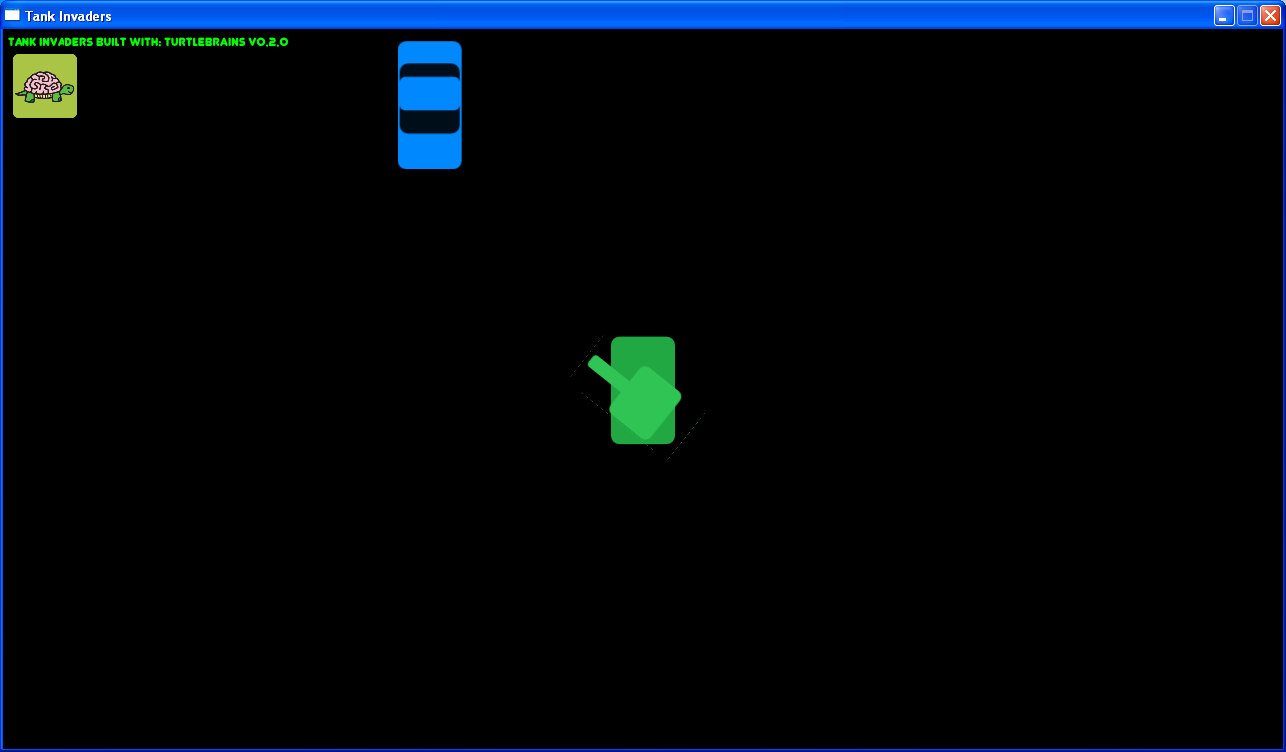

Once the matrices were set up I aimed to get the view to look like Figure 1, which was the output of the tutorial. I did this by changing the rendering path of the sprites and text so that each of those objects would render that shape for the time being. I got the shaders initialized in TurtleBrains, set up the matrices and then started the new RenderQuad() function. In no particular order these are some of the views I ended up with!

The first ones were before I was passing vertex colors correctly or handling them in the fragment shader. Eventually that got sorted out, but the projection matrix was way off, or so I thought. This problem took two nights of personal development time to sort out, and late on night two I realized the problem was with the vertex data being sent down the pipe.

//Initial working vertex data for figure 1, with 'identity like' projection matrix.

verts[] = { { 0, 1, data }, { 1, 0, data }, { 0, -1, data }, { -1, 0, data } }

//Wrong vertex data was being passed with 1280x720 ortho projection matrix.

verts[] = { { 0, 200, data }, { 200, 0, data }, { 0, 100, data }, {100, 0, data } }

//Correct vertex data (after I found the issue) for the 1280x720 ortho projection.

verts[] = { { 150, 200, data }, { 200, 150, data }, { 150, 100, data }, {100, 150, data } }

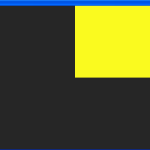

The problem was that the shape of the diamond threw me off and I never moved the ‘center’ point for each vertex. That was fine for the initial data because 0 is halfway between -1 and 1, but it is not halfway between 100 and 200, so a diamond is not the actual shape despite me ‘expecting’ it to be. After finally solving that issue I disabled sprite rendering and was able to see squares for each text object:

(Figure 3. Text objects rendering with position added to verts)
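The diamond mix-up is easier to avoid when the center is an explicit input instead of being implied by the numbers. A minimal sketch, with a hypothetical Point type and MakeDiamond helper that are not TurtleBrains code and that leave out the extra per-vertex data:

```cpp
#include <array>

struct Point { float x, y; };

// Hypothetical helper: builds the four diamond corners around an explicit center,
// in the same order as the listings above (top, right, bottom, left).
std::array<Point, 4> MakeDiamond(float centerX, float centerY, float radius)
{
    return {{
        { centerX,          centerY + radius },
        { centerX + radius, centerY          },
        { centerX,          centerY - radius },
        { centerX - radius, centerY          },
    }};
}
```

MakeDiamond(0, 0, 1) reproduces the initial working data, and MakeDiamond(150, 150, 50) reproduces the corrected data for the 1280x720 projection.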

Where is the colors

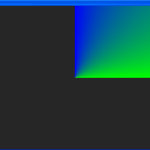

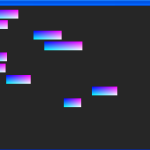

That was as much as could be completed on the second day of this conversion process. The following day I changed the vertex colors from float r,g,b to a 32-bit unsigned abgr (I would have preferred argb, but it seems it goes down as abgr) and got the texture coordinates tested out. This resulted in some pretty neat looking text objects (see Figure 4), as I used the texture coordinates to modify the color within the shader to prove they worked. Shaders can be pretty nice that way.
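One likely reason the color goes down as abgr: if the attribute is described to OpenGL as four normalized unsigned bytes, the shader sees them in memory order as r, g, b, a, and on a little-endian machine the 32-bit value 0xAABBGGRR is stored in exactly that byte order. The sketch below packs float channels that way. PackABGR is a hypothetical name, and both the byte-attribute setup and the little-endian layout are assumptions rather than something the conversion above spells out.

```cpp
#include <algorithm>
#include <cstdint>

// Hypothetical helper: packs float channels in [0, 1] into 0xAABBGGRR.
// On a little-endian machine the bytes then sit in memory as R, G, B, A.
std::uint32_t PackABGR(float r, float g, float b, float a)
{
    auto toByte = [](float channel) -> std::uint32_t {
        const float clamped = std::min(1.0f, std::max(0.0f, channel));
        return static_cast<std::uint32_t>(clamped * 255.0f + 0.5f); // round to nearest
    };
    return (toByte(a) << 24) | (toByte(b) << 16) | (toByte(g) << 8) | toByte(r);
}
```

With this layout opaque red is 0xFF0000FF and opaque blue is 0xFFFF0000.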

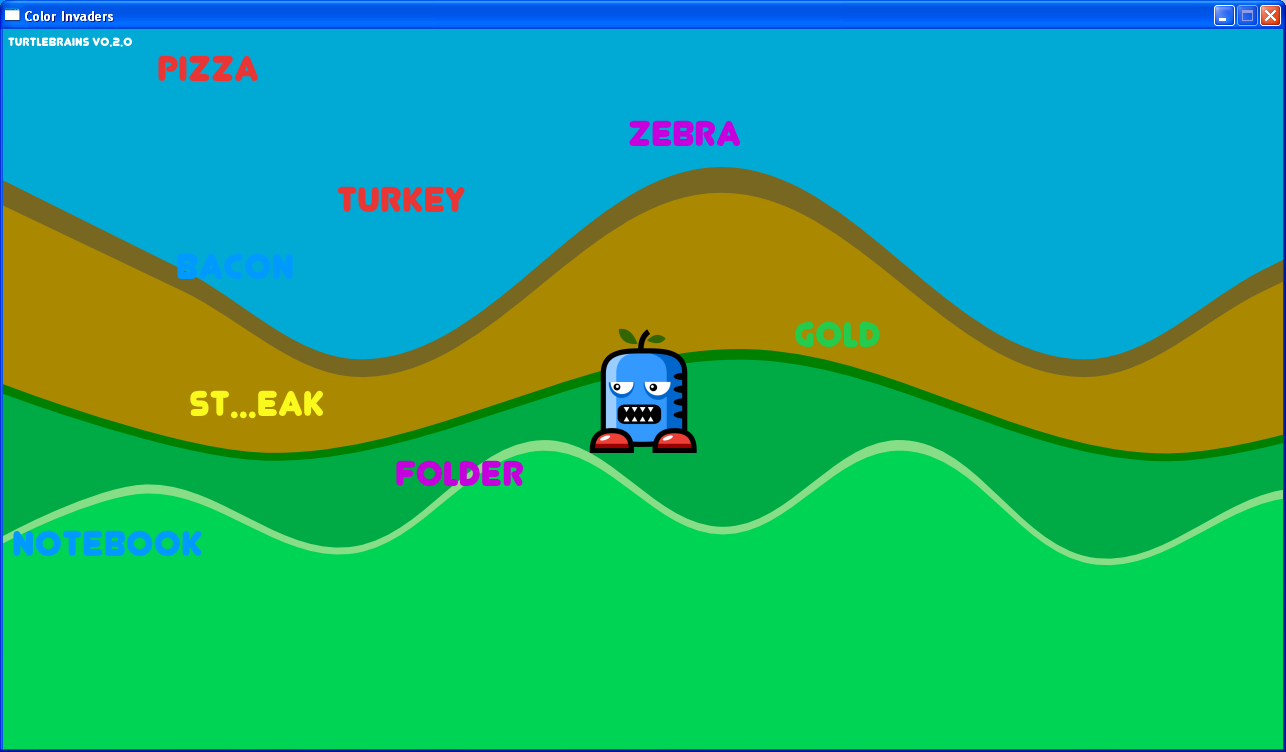

With the texture coordinates working it was time to get a texture sampler. It appears glBindTexture() is still used, but glActiveTexture() was also needed. The text showed black at first (see Figure 5), as I had some issue loading the texture. The text texture was a special case, as TurtleBrains loaded an 8-bit “alpha” texture, which is “unsupported” in OpenGL 3.2 Core. So I skipped on to sprites, disabling text for the moment, and immediately got the results shown in Figure 6: the sprites rendering without alpha blending correctly.

Luckily alpha blending is done exactly the same as before in Legacy OpenGL, so I just needed glEnable(GL_BLEND) and glBlendFunc(GL_SRC_ALPHA, GL_ONE_MINUS_SRC_ALPHA). The same goes for depth testing: glEnable(GL_DEPTH_TEST) and glDepthFunc(GL_LEQUAL), which produced the correct results in Figure 7 below. Feeling like I’m finally getting somewhere! (For those with a keen eye, you probably noticed I’m not passing down the texture coordinates from my sprite, just 0s and 1s for now.)

Getting text to work again

So back to getting the text working. The 8-bit alpha texture is not actually unsupported, just done differently: when uploading the texture with glTexImage2D() the format is GL_RED instead of GL_ALPHA, and it is up to the shader to handle this. Since I haven’t yet decided to support different shaders in TurtleBrains and plan to have just this basic one, I decided to pass a colorMatrix uniform to the shader. This would be the identity matrix to convert ARGB to ARGB, or it would be modified so that R fills out each ARGB channel.
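The real multiplication happens in the fragment shader, but the idea of the colorMatrix can be sketched on the CPU. The values below are an assumption about what "R fills out each channel" could look like, not the actual TurtleBrains matrices, and the names are hypothetical. Channels are ordered r, g, b, a here.

```cpp
#include <array>

using Color = std::array<float, 4>;                       // r, g, b, a
using ColorMatrix = std::array<std::array<float, 4>, 4>;  // one row of weights per output channel

// Each output channel is a weighted sum of the four sampled channels.
Color ApplyColorMatrix(const ColorMatrix& m, const Color& sampled)
{
    Color out{};
    for (int row = 0; row < 4; ++row)
        for (int col = 0; col < 4; ++col)
            out[row] += m[row][col] * sampled[col];
    return out;
}

// Regular textures: every channel passes through unchanged.
const ColorMatrix kIdentity = {{
    { 1.0f, 0.0f, 0.0f, 0.0f },
    { 0.0f, 1.0f, 0.0f, 0.0f },
    { 0.0f, 0.0f, 1.0f, 0.0f },
    { 0.0f, 0.0f, 0.0f, 1.0f },
}};

// Single-channel (GL_RED) textures: every output channel copies the red input.
const ColorMatrix kRedToAll = {{
    { 1.0f, 0.0f, 0.0f, 0.0f },
    { 1.0f, 0.0f, 0.0f, 0.0f },
    { 1.0f, 0.0f, 0.0f, 0.0f },
    { 1.0f, 0.0f, 0.0f, 0.0f },
}};
```

A GL_RED texture samples as (r, 0, 0, 1), so with kRedToAll a half-covered text pixel becomes (0.5, 0.5, 0.5, 0.5), while kIdentity leaves sprite colors untouched.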

This got the text texture loaded and working (Figure 8a), and with the colorMatrix Figure 8b was the result. The vertex color change was finally needed for the text colors to work, so I modified the shader to get that working and, after a few oddities (Figure 8c), finally got to Figure 8d. But when looking closely at that image you can see a black outline around the text. neilogd explained this was from the colorMatrix and how the text texture was loaded: essentially, as the “alpha” channel went down, black became apparent instead of white, so I added a colorTint to modify this back.

This took a few iterations in the fragment shader to implement correctly, as you can see in Figure 8e, and Figure 8f is close to the right colors but not correct, as shown below:

Not quite finished yet

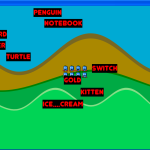

That allowed that project to work. However, I needed to extract the adding of positions to the quads from the sprite and text rendering; this was to be done with a freshly built matrix stack that would resemble parts of the Legacy OpenGL stack: glPushMatrix(), glTranslate(), glRotate() etc. I noticed this because in the original project the text was supposed to sink behind the lowest grass in that image, so Notebook would not be visible, and Folder would only be partially visible.

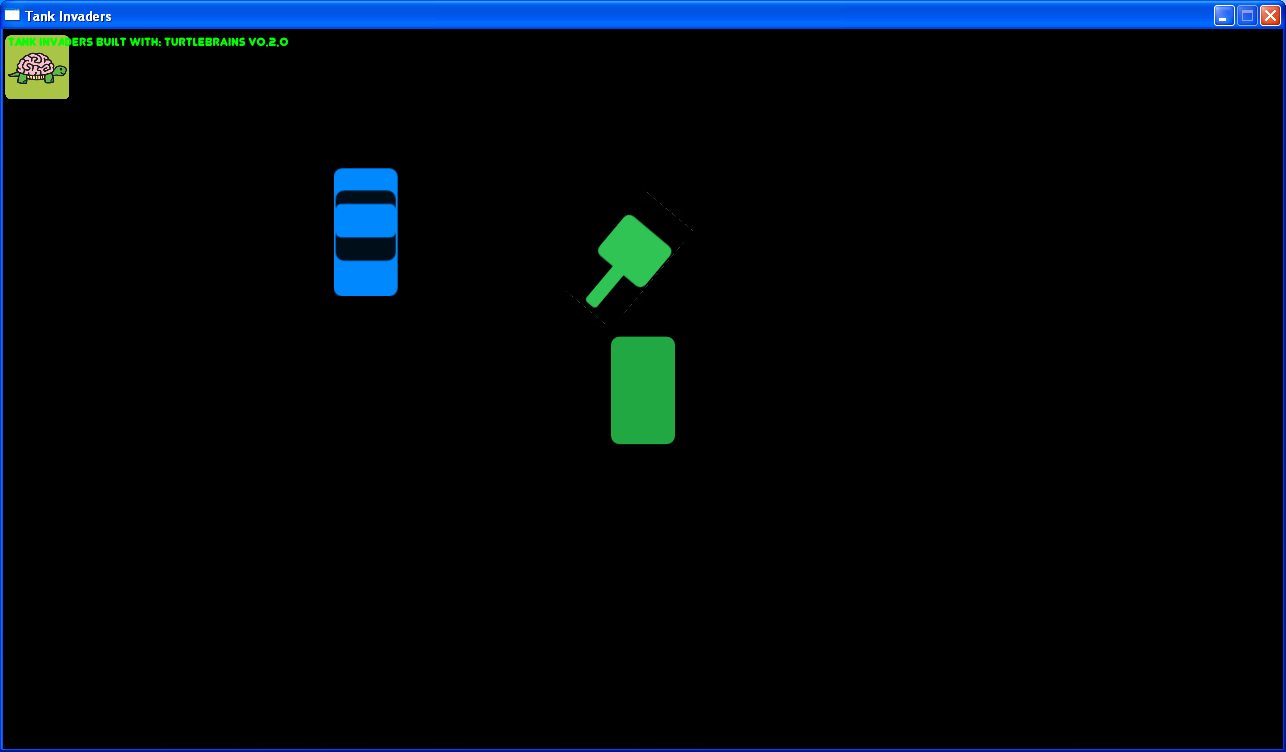

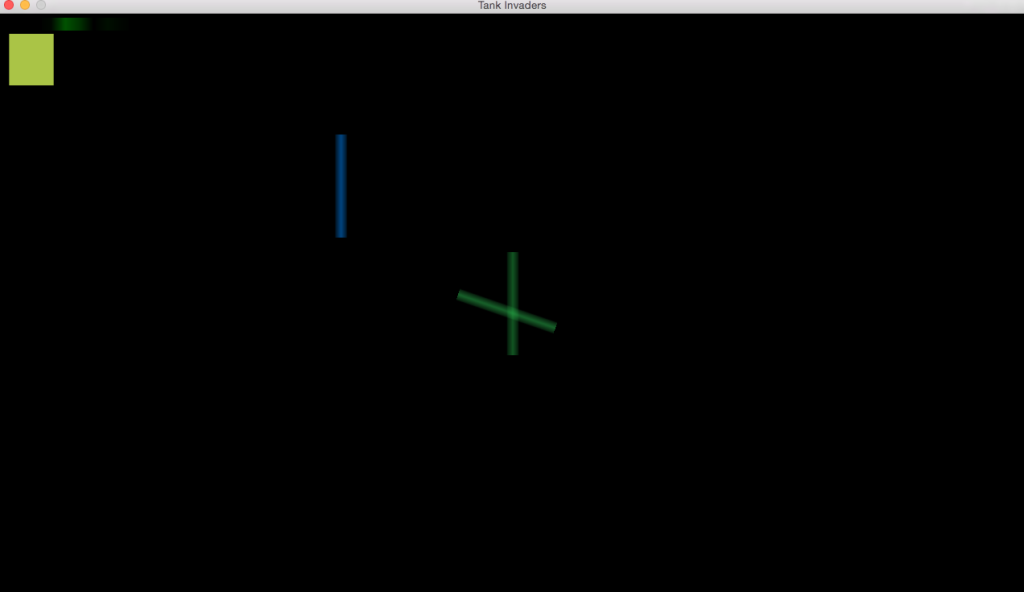

It was relatively straightforward to get the modelView matrix to support the translation needed for this example project, but I had to move to a different project to test push/pop and rotation/scaling.

The tank’s turret should not be rotating around the top-left position of the tank/turret sprite like it does in the above shot. It took a few hours one night and part of the following night to get the TurtleBrains rotate function working as glRotate did. The problem turned out to be the order of my matrix multiplication in Translate: instead of A * B I needed B * A. I had tried swapping this back and forth in rotate, but didn’t realize the problem was with the translation that occurred after the rotation until I printed out the matrix at each step and followed along.
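To see why the multiplication order matters, here is a small 2D sketch using 3x3 matrices. It is not the TurtleBrains matrix stack; the helper names and the row-major layout are assumptions made for illustration. The legacy calls post-multiply the current matrix, so translate to the pivot, rotate, translate back yields T(pivot) * R * T(-pivot), which leaves the pivot in place. Swapping the order of the translations gives a different matrix that drags the pivot somewhere else.

```cpp
#include <array>
#include <cmath>

using Matrix3 = std::array<float, 9>; // row-major 3x3 acting on points (x, y, 1)

Matrix3 Multiply(const Matrix3& a, const Matrix3& b)
{
    Matrix3 result{};
    for (int row = 0; row < 3; ++row)
        for (int col = 0; col < 3; ++col)
            for (int k = 0; k < 3; ++k)
                result[row * 3 + col] += a[row * 3 + k] * b[k * 3 + col];
    return result;
}

Matrix3 Translation(float x, float y)
{
    return { 1.0f, 0.0f, x,
             0.0f, 1.0f, y,
             0.0f, 0.0f, 1.0f };
}

Matrix3 Rotation(float radians)
{
    const float c = std::cos(radians);
    const float s = std::sin(radians);
    return { c,    -s,   0.0f,
             s,    c,    0.0f,
             0.0f, 0.0f, 1.0f };
}

// Transforms the point (x, y, 1) and returns the resulting x and y.
std::array<float, 2> Transform(const Matrix3& m, float x, float y)
{
    return { m[0] * x + m[1] * y + m[2],
             m[3] * x + m[4] * y + m[5] };
}

// Rotation about a pivot, composed the way the legacy stack would compose it.
Matrix3 RotateAbout(float pivotX, float pivotY, float radians)
{
    return Multiply(Multiply(Translation(pivotX, pivotY), Rotation(radians)),
                    Translation(-pivotX, -pivotY));
}
```

Printing the matrix after each Multiply, as described above, is a practical way to spot which step has its operands reversed.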

One final push

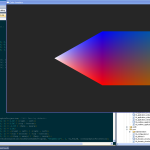

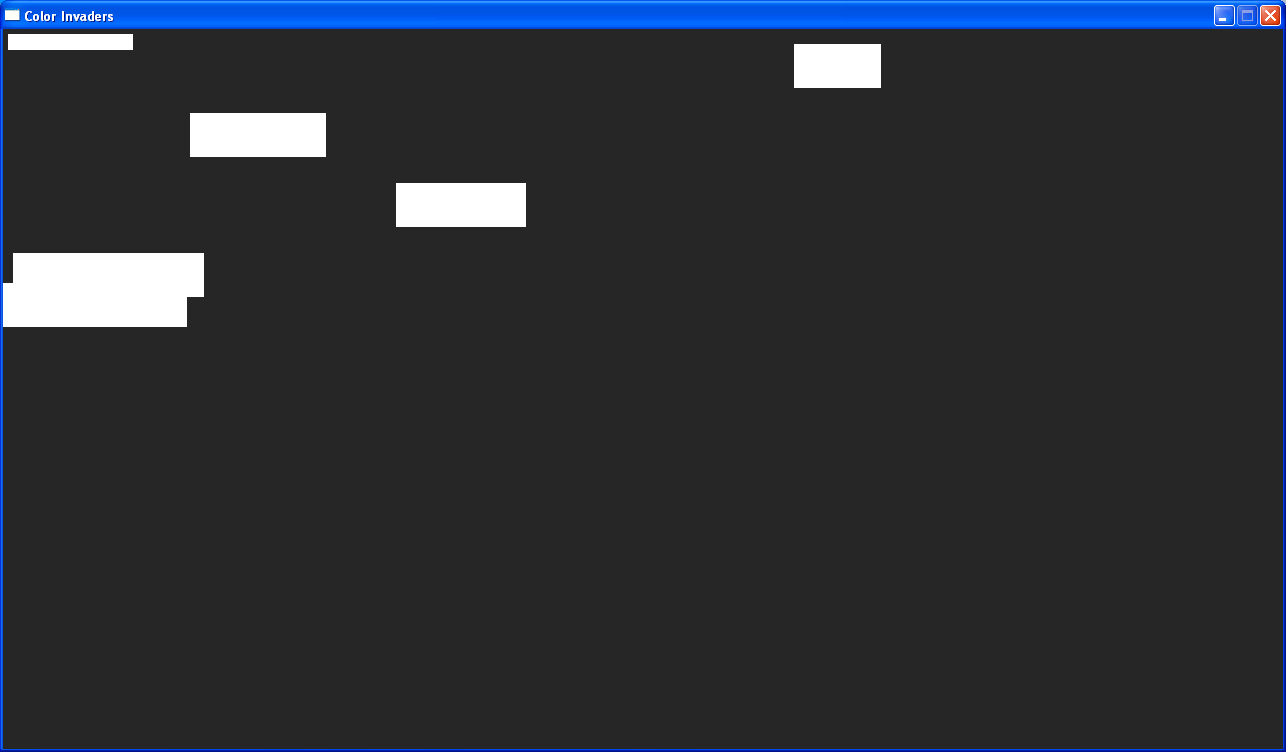

That did not quite complete the conversion from Legacy OpenGL to OpenGL 3.2 Core. First the mess needed to be cleaned up and prepped for TurtleBrains quality, and error checking needed to be added. I also still needed to test on Mac OS X, but why would there be any issues there now that I’m following the stricter “core” OpenGL functionality? How wrong could that be:

Clearly something went horribly wrong; there must be some state that was not the same as it was on Windows. Since error checking needed to be added and would likely show me what went wrong, I created a macro to turn it on/off depending on debug/release builds and started checking for errors after each glCall. I found and fixed a few minor issues that likely also occurred on Windows. But nothing changed, so I loaded the other test project and saw the following:

This was quite shocking and after I finished checking errors after all glCalls I stopped for a moment to just look at that image, Figure 12b, and paused. Was suddenly hit with one of those rare moments of the light-bulb turning on. If you haven’t experienced this, keep solving problems because it is amazing when it happens. It occurred to me by looking at Figure 12b that the texture coordinates were not being read correctly, either both were always 0, or at least one of them was always 0, and then immediately the second lightbulb went off.

In my rush through creating the Vertex2D structure I allowed a very bad habit to flow through my fingers. The struct was:

struct Vertex2D {

float x, y;

unsigned long abgr;

float u, v;

};

Yes, that is correct: abgr should be 32 bits, and the size of unsigned long can vary depending on the platform. On my Windows machine it happened to be 32 bits, and on Mac OS X, 64 bits. Changing this type from unsigned long to uint32_t solves the problem regardless of the platform, and is something I need to pay much closer attention to for future platform independence.
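A sketch of the fixed struct with compile-time checks that would catch this kind of drift on any platform. The static_assert lines are an addition for illustration, and they assume a 4-byte float. With a 64-bit unsigned long and typical 8-byte alignment, the struct grows to 24 bytes and u moves from offset 12 to offset 16, so any attribute setup written for the 20-byte layout would read part of the color as a texture coordinate.

```cpp
#include <cstddef>
#include <cstdint>

struct Vertex2D {
    float x, y;
    std::uint32_t abgr; // exactly 32 bits wherever the type exists
    float u, v;
};

// Fail the build instead of rendering garbage if the layout ever changes.
static_assert(sizeof(Vertex2D) == 20, "Vertex2D must be tightly packed: 5 x 4 bytes");
static_assert(offsetof(Vertex2D, abgr) == 8, "color must follow the two position floats");
static_assert(offsetof(Vertex2D, u) == 12, "texture coordinates must follow the 32-bit color");
```

Passing sizeof(Vertex2D) and offsetof(...) to the vertex attribute setup, instead of hard-coded numbers, keeps the GL side in sync with the struct as well.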

If you’ve read this far I congratulate you and hope you’ve learned something from my mistakes and process. I feel much better going forward knowing that future rendering in TurtleBrains will be compliant with OpenGL 3.2 Core, and should thus be less buggy across different hardware and drivers and easier to port to other devices. Strive for code quality.